The Rise of the Strategic Architect

Posted By: Minnesota PRSA Admin

Posted On: 2026-02-26T18:14:10Z

Beyond the Generalist: The Rise of the Strategic Architect

By Rico Paul Vallejos, CT

RicoLatino LLC

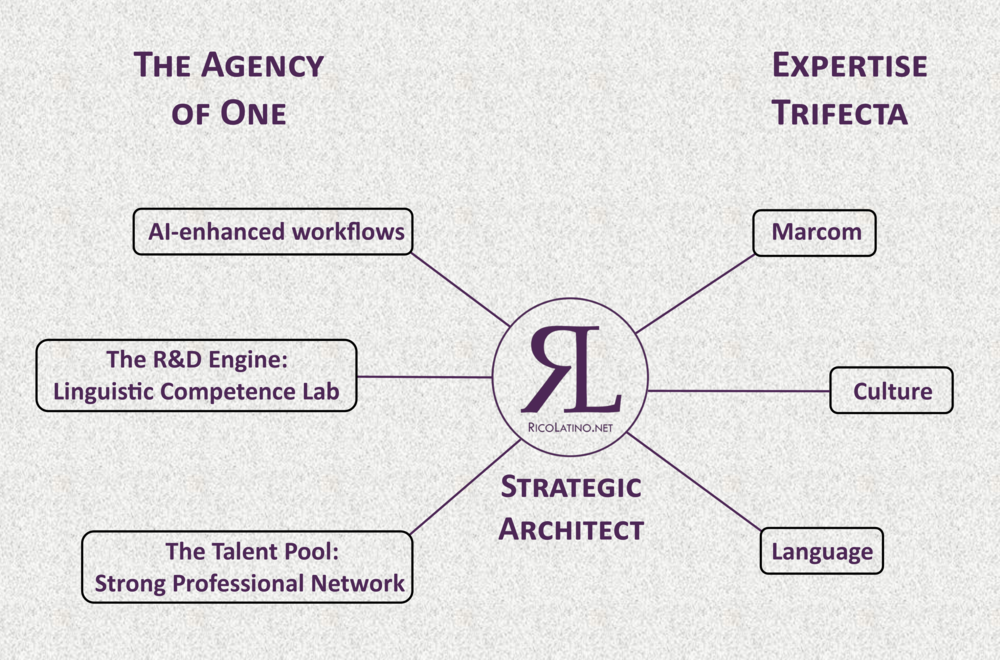

In the traditional communications landscape, solo practitioners have long been unfairly pigeonholed as generalists, jacks-of-all-trades whose versatility is mistaken for a lack of focus. While a solo pro’s portfolio might appear scattered to some, this multifaceted approach can represent a Perfect Trifecta: a deliberate convergence of deep expertise in specialized disciplines that creates a powerful competitive moat.

For my own practice, this Trifecta operates at the high-stakes intersection of marcom, language and culture. Specialized services, such as Conversational Spanish Coaching, are not side hustles; they function as a Linguistic Competence Lab. This R&D arm ensures that multicultural marketing at RicoLatino remains nuanced and authentic, avoiding the pitfalls of surface-level translation. Even pro bono work feeds this lab.

The modern Agency-of-One is no longer limited by headcount. By integrating AI-enhanced workflows, we deliver world-class results without the traditional overhead. However, solo is not synonymous with soloist. Using an ad hoc team model and networks like PRSA, I assemble specialized experts with surgical precision. This allows a practitioner to complement a client’s internal capacity with the agility of a boutique shop.

Finally, there is the Transparency Premium. While RicoLatino serves as the agency front, maintaining a personal presence at vallejos.net addresses a growing demand for direct accountability over opaque agency layers. We are entering the era of the Strategic Architect. By anchoring in core competencies and AI-powered networks, we provide the bridge that keeps brands relevant in a complex global market.
